Artificial intelligence has come a long way since scientists first wondered if machines could think.

In the 20th century, the world became familiar with artificial intelligence (AI) through sci-fi robots that could think and act like humans. In 1950, British scientist and philosopher Alan Turing posed the question “Can machines think?” in his seminal paper “Computing Machinery and Intelligence,” where he discussed creating machines that can think and make decisions the same way humans do (reference 1). Although Turing’s ideas set the stage for future AI research, they were ridiculed at the time. It took several decades and an immense amount of work from mathematicians and scientists to develop the field of artificial intelligence, which is formally defined as “the understanding that machines can interpret, mine, and learn from external data in a way that imitates human cognitive practices” (reference 2).

Even as scientists grew more accustomed to the idea of AI, limited data accessibility and expensive computing power hindered its growth. Only after these challenges were mitigated, following several “AI winters” (periods of limited advances in the field), did the field experience exponential growth. There are now more than a dozen types of AI being advanced (figure).

Due to the accelerated popularity of AI in the 2010s, venture capital funding flooded into a large number of startups focused on machine learning (ML). This technology centers on continuously learning algorithms that make decisions or identify patterns. For example, the YouTube algorithm may recommend less relevant videos at first, but over time it learns to recommend better-targeted videos based on the user’s previously watched videos.

The three main types of ML are supervised, unsupervised, and reinforcement learning. Supervised learning refers to an algorithm finding the relationship between a set of input variables and known, labeled output variable(s), so it can make predictions about new input data. Unsupervised learning refers to the task of identifying patterns and categories in unlabeled data and organizing the data in a way that makes it easier to discover insights. Lastly, reinforcement learning refers to intelligent agents that learn which actions to take in a defined environment by trying to maximize the reward they receive, as defined by a set of reward functions.
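To make the first two learning types concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not a production approach: the function names `fit_line` and `two_means` and the toy data are hypothetical, least-squares line fitting stands in for supervised learning, and a two-cluster, one-dimensional k-means stands in for unsupervised learning. Reinforcement learning is left out to keep the example short.

```python
def fit_line(xs, ys):
    """Supervised: learn y = slope * x + intercept from labeled (x, y) pairs
    using ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def two_means(values, iterations=10):
    """Unsupervised: split unlabeled numbers into two groups (1-D k-means, k=2).
    No labels are given; the groups emerge from the data alone."""
    centers = [min(values), max(values)]  # simple, deterministic starting guess
    groups = [[], []]
    for _ in range(iterations):
        groups = [[], []]
        for v in values:
            nearest = 0 if abs(v - centers[0]) <= abs(v - centers[1]) else 1
            groups[nearest].append(v)
        # Move each center to the mean of its group (keep it if the group is empty).
        centers = [sum(g) / len(g) if g else c for g, c in zip(groups, centers)]
    return centers, groups


# Supervised: labeled examples generated from y = 2x + 1, then a prediction for x = 5.
slope, intercept = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
print(slope * 5 + intercept)  # 11.0

# Unsupervised: the same kind of numbers, but with no labels attached.
print(two_means([1, 2, 3, 10, 11, 12])[0])  # [2.0, 11.0]
```

The contrast to notice is in the inputs: `fit_line` needs the known outputs `ys` to learn from, while `two_means` receives only raw values and has to find the structure itself.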

Deep learning, a subset of ML, saw numerous groundbreaking advances throughout the 2010s. Loosely analogous to the connections between nerve cells in the brain, neural networks consist of anywhere from several thousand to millions of hidden nodes and connections. Each node acts as a simple mathematical function; combined, these nodes can solve extremely complex problems such as image classification, translation, and text generation.
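The idea that each node is a small mathematical function can be sketched in a few lines of Python. This is an illustrative toy, not a trained model: the weights below are hand-picked assumptions (a real network would learn them from data), and the names `node` and `xor_network` are hypothetical. Two hidden nodes and one output node are combined to compute XOR, a function that no single node of this kind can represent on its own.

```python
def relu(x):
    """A common activation function: pass positive values, clip negatives to 0."""
    return max(0.0, x)


def node(inputs, weights, bias):
    """One node: a weighted sum of its inputs plus a bias, passed through ReLU."""
    return relu(sum(w * i for w, i in zip(weights, inputs)) + bias)


def xor_network(a, b):
    """A tiny two-layer network with hand-picked (not learned) weights."""
    h1 = node([a, b], [1.0, 1.0], 0.0)   # active when at least one input is on
    h2 = node([a, b], [1.0, 1.0], -1.0)  # active only when both inputs are on
    return h1 - 2.0 * h2                 # output node: linear combination


for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, xor_network(a, b))
# 0 0 0.0
# 0 1 1.0
# 1 0 1.0
# 1 1 0.0
```

Larger networks apply the same pattern at scale: many such nodes, arranged in layers, with weights adjusted automatically during training.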

Impact of artificial intelligence

Human lifestyle and productivity have drastically improved with the advances in artificial intelligence. Health care, for example, has seen immense AI adoption with robotic surgeries, vaccine development, genome sequencing, etc. (reference 5). So far, the adoption in manufacturing and agriculture has been slow, but these industries have immense untapped AI possibilities (reference 6). According to a recent article published by Deloitte, the manufacturing industry has high hopes for AI because the annual data generated in this industry is thought to be around 1,800 petabytes (reference 7).

This proliferation of data, if properly managed, essentially acts as a “fuel” that drives advanced analytical solutions that can be used for the following (reference 8):

- becoming more agile and disruptive by learning trends about customers and the industry ahead of competitors

- saving costs through process automation

- improving efficiency by identifying processes’ bottlenecks

- enhancing customer experience by analyzing human behavior

- making informed business decisions, such as targeted advertising and communication (reference 9).

Ultimately, AI and advanced analytics can augment humans by taking over repetitive and sometimes even dangerous tasks while increasing focus on endeavors that drive high value. AI is not a far-fetched concept; it is already here, and it is having a substantial impact in a wide range of areas, including finance, national security, health care, criminal justice, transportation, and smart cities.

AI adoption has been steadily increasing. Companies reported 56 percent adoption in 2021, an uptick of 6 percentage points compared to 2020 (reference 10). With the technology becoming more mainstream, trends toward solutions that emphasize “explainability,” accessibility, data quality, and privacy are gaining momentum.

“Explainability” drives trust. To keep up with the continuous demand for more accurate AI models, hard-to-explain (black-box) models are used. Not being able to explain these models makes it difficult to achieve user trust and to pinpoint problems (bias, parameters, etc.), which can result in unreliable models that are difficult to scale. Due to these concerns, the industry is adopting more explainable artificial intelligence (XAI).

According to IBM, XAI is a set of processes and methods that allows human users to comprehend and trust the ML algorithm’s outputs (reference 11). Additionally, explainability can increase accountability and governance.
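One simple way to picture what an “explanation” can look like is a per-feature breakdown of a model’s output. The sketch below assumes a plain linear scoring model, which is explainable by construction; it is not a method described by IBM or the sources cited here, and the function name `explain_linear_score`, the feature names, and the weights are all hypothetical. Black-box models need dedicated XAI techniques to produce a comparable breakdown.

```python
def explain_linear_score(weights, bias, features):
    """Return the model's score plus each feature's contribution to it,
    ranked from most to least influential (by absolute size)."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical machine-risk model and one machine's readings.
weights = {"vibration": 2.0, "temperature": 0.5, "age_years": 1.0}
reading = {"vibration": 3.0, "temperature": 4.0, "age_years": 1.0}

score, ranked = explain_linear_score(weights, bias=-1.0, features=reading)
print(score)   # 8.0
print(ranked)  # [('vibration', 6.0), ('temperature', 2.0), ('age_years', 1.0)]
```

A user who sees that vibration accounts for most of the score can check that reasoning against domain knowledge, which is the kind of trust and problem-pinpointing the text describes.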

Increasing AI accessibility. The “productization” of cloud computing for ML has taken the large compute resources and models, once reserved only for big tech companies, and put them in the hands of individual consumers and smaller organizations. This drastic shift in accessibility has fueled further innovation in the field. Now, consumers and enterprises of all sizes can reap the benefits of:

- pretrained models (GPT-3, YOLO, CoCa [fine-tuned])

- building models with no-code/low-code solutions (Azure’s ML Studio)

- serverless architecture (hosting company manages the server upkeep)

- instantly spinning up more memory or compute power when needed

- improved elasticity and scalability.

Data mindset shift. Historically, model-centric ML development, i.e., “keeping the data fixed and iterating over the model and its parameters to improve performances” (reference 12), has been the typical approach. Unfortunately, the performance of a model is only as good as the data used to train it. Although there is no scarcity of data, high-performing models require accurate, properly labeled, and representative datasets. This concept has shifted the mindset from model-centric development toward data-centric development—“when you systematically change or enhance your datasets to improve the performance of the model” (reference 12).

One way to improve data quality is to create descriptive labeling guidelines to mitigate recall bias when using data labeling services like Amazon Mechanical Turk. Additionally, responsible AI frameworks should be in place to ensure data governance, security and privacy, fairness, and inclusiveness.

Data privacy through federated learning. The importance of data privacy has not only forged the path to new laws (e.g., GDPR and CCPA), but also new technologies. Federated learning enables ML models to be trained using decentralized datasets without exchanging the training data. Personal data remains in local sites, reducing the possibility of personal data breaches.

Additionally, the raw data does not need to be transferred, which helps make predictions in real time. For example, “Google uses federated learning to improve on-device machine learning models like ‘Hey Google’ in Google Assistant, which allows users to issue voice commands” (reference 13).
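The mechanics of federated learning can be sketched with a toy example. The code below uses federated averaging, one common scheme, as an assumption; the article does not say which algorithm Google or anyone else uses. The model is a single weight `w` in `y = w * x`, and the function names, learning rate, and client datasets are all hypothetical. The point to observe is that each client’s data stays inside `local_update`; only the updated weight is sent back.

```python
def local_update(w, data, lr=0.01, epochs=5):
    """Runs on the client: improve w on private (x, y) pairs with gradient
    descent on squared error. Only the resulting weight leaves the device."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(global_w, clients):
    """Runs on the server: collect each client's updated weight and average
    them, weighting by how many examples each client holds."""
    total = sum(len(data) for data in clients)
    updates = [(local_update(global_w, data), len(data)) for data in clients]
    return sum(w * n for w, n in updates) / total


def train(clients, rounds=50):
    w = 0.0
    for _ in range(rounds):
        w = federated_round(w, clients)
    return w


# Three hypothetical sites, each holding private samples of y = 3x.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0)],
    [(1.0, 3.0), (4.0, 12.0), (2.0, 6.0)],
]
print(round(train(clients), 4))  # 3.0
```

In this sketch the server never sees a single `(x, y)` pair, yet the shared model still converges to the relationship present across all sites.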

AI in smart factories

Maintenance, demand forecasting, and quality control are processes that can be optimized through the use of artificial intelligence. To achieve these use cases, data is ingested from smart interconnected devices and/or systems such as SCADA, MES, ERP, QMS, and CMMS. This data is brought into machine learning algorithms on the cloud or on the edge to deliver actionable insights. According to IoT Analytics (reference 14), the top AI applications are:

- predictive maintenance (22.2 percent)

- quality inspection and assurance (19.7 percent)

- manufacturing process optimization (13 percent)

- supply chain optimization (11.5 percent)

- AI-driven cybersecurity and privacy (6.6 percent)

- automated physical security (6.5 percent)

- resource optimization (4.8 percent)

- autonomous resource exploration (3.8 percent)

- automated data management (2.9 percent)

- AI-driven research and development (2.1 percent)

- smart assistant (1.6 percent)

- other (5.2 percent).

Vision-based AI systems and robotics have helped develop automated inspection solutions for machines. These automated systems have not only been proven to save human lives but have radically reduced inspection times. There have been significant examples where AI has outperformed humans, and it is a safe bet to conclude that several AI applications enable humans to make informed and quick decisions (reference 15).

Given the myriad additional AI applications in manufacturing, we cannot cover them all. But a good example to delve deeper into is predictive maintenance because it has such a large effect on the industry.

Generally, maintenance follows one of four approaches: reactive, or fix what is broken; planned, or scheduled maintenance activities; proactive, or defect elimination to improve performance; and predictive, which uses advanced analytics and sensing data to predict machine reliability.

Predictive maintenance can help flag anomalies, anticipate remaining useful life, and provide mitigations or maintenance (reference 17). Compared to the simple corrective or condition-based nature of the first three maintenance approaches, predictive maintenance is preventive and takes into account more complex, dynamic patterns. It can also adapt its predictions over time as the environment changes. Once accurate failure models are built, companies can build mathematical models to reduce costs and choose the best maintenance schedules based on production timelines, team bandwidth, replacement piece availability, and other factors.
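Two of the tasks named above, flagging anomalies and anticipating remaining useful life, can be sketched with deliberately simple statistics. This is a minimal illustration under stated assumptions, not how any particular vendor’s system works: anomalies are flagged with a three-sigma rule against a healthy baseline, remaining useful life is estimated by extrapolating a straight-line trend to a failure threshold, and the function names, sensor values, and threshold are hypothetical. Real systems typically use richer models that capture the complex, dynamic patterns the text mentions.

```python
from statistics import mean, stdev


def flag_anomalies(readings, baseline, z_limit=3.0):
    """Return the indexes of readings that sit more than z_limit standard
    deviations away from the mean of a known-healthy baseline."""
    mu, sigma = mean(baseline), stdev(baseline)
    return [i for i, r in enumerate(readings) if abs(r - mu) > z_limit * sigma]


def remaining_useful_life(readings, failure_threshold):
    """Fit a straight line to equally spaced readings and extrapolate to the
    failure threshold. Returns the number of time steps left after the last
    reading, or None if the readings show no upward trend."""
    n = len(readings)
    t_mean = (n - 1) / 2
    r_mean = mean(readings)
    slope = sum((t - t_mean) * (r - r_mean) for t, r in enumerate(readings)) / sum(
        (t - t_mean) ** 2 for t in range(n)
    )
    if slope <= 0:
        return None
    intercept = r_mean - slope * t_mean
    t_fail = (failure_threshold - intercept) / slope
    return max(0.0, t_fail - (n - 1))


# Hypothetical vibration levels: a healthy baseline, then new readings to check.
baseline = [10.0, 11.0, 9.0, 10.0, 10.0]
print(flag_anomalies([10.0, 10.5, 15.0, 9.8], baseline))  # [2]

# Hypothetical wear indicator rising one unit per step; failure assumed at 10.
print(remaining_useful_life([1.0, 2.0, 3.0, 4.0], failure_threshold=10.0))  # 6.0
```

An estimate like the second one is what feeds the scheduling step described above: once the likely failure window is known, maintenance can be planned around production timelines and parts availability.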

Bombardier, an aircraft manufacturer, has adopted AI techniques to predict the demand for its aircraft parts based on input features (i.e., flight activity) to optimize its inventory management (reference 18).

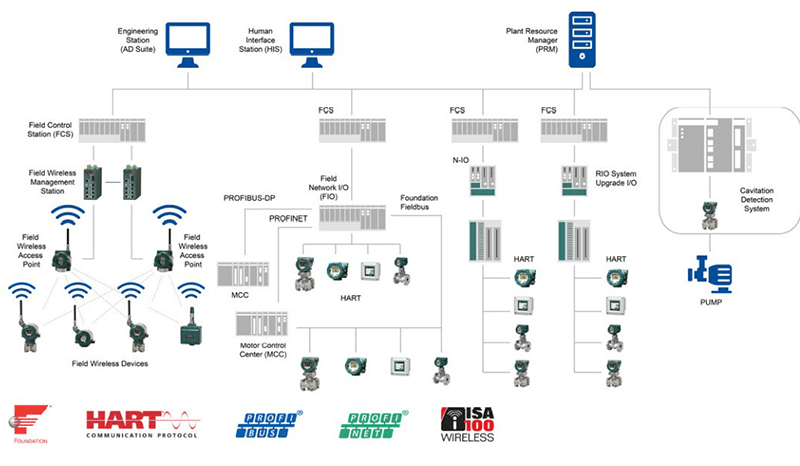

This example and others show how advances in AI depend on advances associated with other Industry 4.0 technologies, including cloud and edge computing, advanced sensing and data gathering, and wired and wireless networking.

(Courtesy of International Society of Automation)

About The Authors:

Ines Mechkane is the AI Technical committee chair of ISA’s SMIIoT Division. She is also a senior technical consultant with IBM. She has a background in petroleum engineering and international experience in artificial intelligence, product management, and project management. Passionate about making a difference through AI, Mechkane takes pride in her ability to bridge the gap between the technical and business worlds.

Manav Mehra is a data scientist with the Intelligent Connected Operations team at IBM Canada focusing on researching and developing machine learning models. He has a master’s degree in mathematics and computer science from the University of Waterloo, Canada, where he worked on a novel AI-based time-series challenge to prevent people from drowning in swimming pools.

Adissa Laurent is AI delivery lead within LGS, an IBM company. Her team maintains AI solutions running in production. For many years, Laurent has been building AI solutions for the retail, transport, and banking industries. Her areas of expertise are time series prediction, computer vision, and MLOps.

Eric Ross is a senior technical product manager at ODAIA. After spending five years working internationally in the oil and gas industry, Ross completed his master of management in artificial intelligence. Ross then joined the life sciences industry to own the product development of a customer data platform infused with AI and BI.
